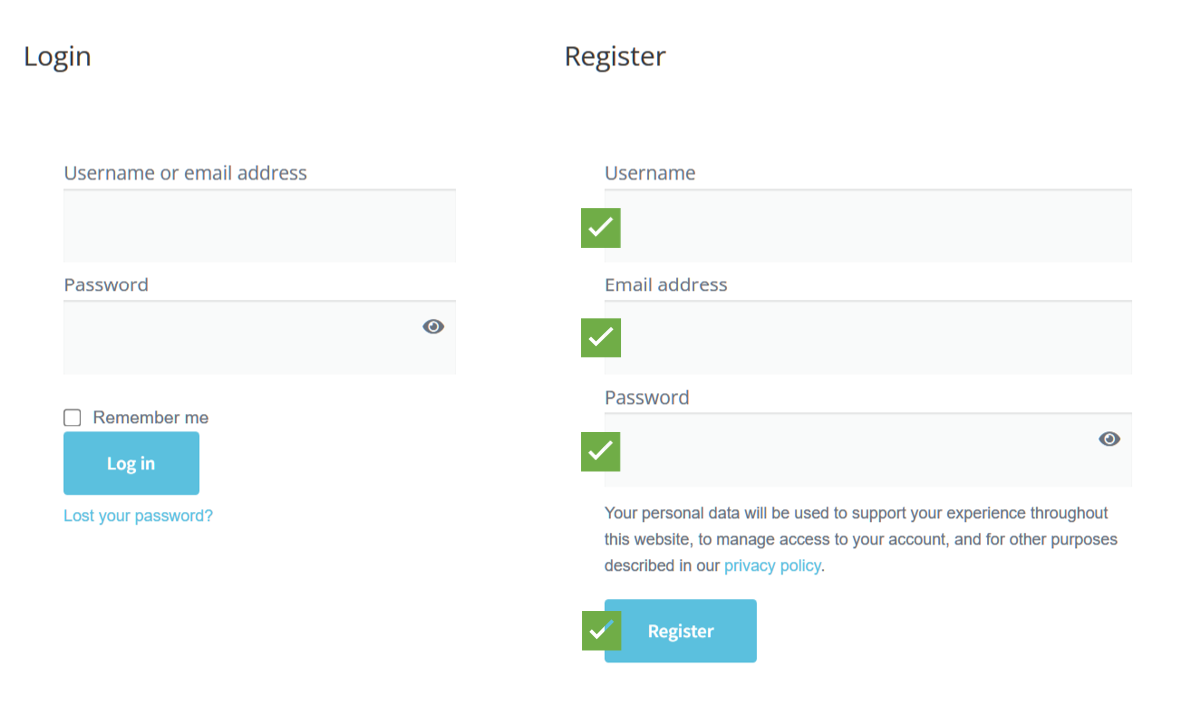

More than you might think, AI (Artificial Intelligence) and ML (Machine Learning) bots are crawling your site and scraping your content. They collect your data and use it to train products like OpenAI's ChatGPT, DeepSeek, and thousands of other AI creations. Whether you or anyone else approves of all this is not my concern for this post. This post is aimed at website owners who want to stop AI bots from crawling their web pages, as much as possible. To help with this, I’ve been collecting data and researching AI bots for many months now, and have put together a “Mega Block List” to help stop AI bots from devouring your content.

The ultimate block list for stopping AI bots from crawling your site.

Contents

- Block AI Bots via robots.txt

- Block AI Bots via Apache/.htaccess

- Notes

- Changelog

- Disclaimer

- Show Support

- References

- Feedback

If you can edit a file, you can block a ton of AI bots.

Block AI Bots via robots.txt

The easiest way for most website owners to block AI bots is to append the following list to their site’s robots.txt file. There are many resources explaining the robots.txt file, and I encourage anyone unfamiliar with it to take a few moments to learn more.

In a nutshell, robots.txt is a plain-text file that contains rules for bots to obey. You can add rules that limit where bots can crawl, whether individual pages or the entire site. Once you have added your rules, simply upload the file to the public root directory of your website, so that it is available at /robots.txt. For example, here is my robots.txt for Perishable Press.
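For a rough feel of how a rule-following crawler reads such a file, here is a minimal sketch using Python's standard-library urllib.robotparser module. The two bot names and the example.com URL are placeholders for illustration, and Python's parser is only one implementation among many; real crawlers use their own. The User-agent lines are kept together because this particular parser treats a blank line as the end of a group.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt: several User-agent lines share one "Disallow: /" rule.
# The lines are consecutive because Python's parser ends a group at a blank line.
ROBOTS_TXT = """\
User-agent: CCBot
User-agent: Bytespider
Disallow: /
"""

def can_crawl(user_agent: str, url: str = "https://example.com/some-post/") -> bool:
    """Return True if a compliant crawler using this user agent may fetch url."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())  # parse the lines directly; no network fetch
    return parser.can_fetch(user_agent, url)

for agent in ("CCBot/2.0", "Bytespider", "SomeOtherBot/1.0"):
    print(agent, "->", "allowed" if can_crawl(agent) else "blocked")
```

Because SomeOtherBot matches no group and this sample has no "User-agent: *" group, it is allowed everywhere. Note also that nothing forces a crawler to call can_fetch() at all, which is why robots.txt rules remain suggestions.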

To block AI bots via your site’s robots.txt file, append the following rules. Understand that bots are not required to obey robots.txt rules; they are merely suggestions. Good bots will follow the rules, while bad bots will ignore them and do whatever they want. To force compliance, you can add blocking rules via Apache/.htaccess. With that in mind, here are the robots rules to block AI bots.

Blocks 400+ AI bots and user agents.

Block list for robots.txt

Before using, read the Notes and Disclaimer.

```text
# Ultimate AI Block List v1.4 20250417
# https://perishablepress.com/ultimate-ai-block-list/

User-agent: .ai
User-agent: Agentic
User-agent: AI Article Writer
User-agent: AI Content Detector
User-agent: AI Dungeon
User-agent: AI Search Engine
User-agent: AI SEO Crawler
User-agent: AI Writer
User-agent: AI21 Labs
User-agent: AI2Bot
User-agent: AIBot
User-agent: AIMatrix
User-agent: AISearchBot
User-agent: AI Training
User-agent: AITraining
User-agent: Alexa
User-agent: Alpha AI
User-agent: AlphaAI
User-agent: Amazon Bedrock
User-agent: Amazon-Kendra
User-agent: Amazon Lex
User-agent: Amazon Comprehend
User-agent: Amazon Sagemaker
User-agent: Amazon Silk
User-agent: Amazon Textract
User-agent: AmazonBot
User-agent: Amelia
User-agent: AndersPinkBot
User-agent: Anthropic
User-agent: AnyPicker
User-agent: Anyword
User-agent: Aria Browse
User-agent: Articoolo
User-agent: Automated Writer
User-agent: AwarioRssBot
User-agent: AwarioSmartBot
User-agent: Azure
User-agent: BardBot
User-agent: Brave Leo
User-agent: ByteDance
User-agent: Bytespider
User-agent: CatBoost
User-agent: CC-Crawler
User-agent: CCBot
User-agent: ChatGLM
User-agent: Chinchilla
User-agent: Claude
User-agent: ClearScope
User-agent: Cohere
User-agent: Common Crawl
User-agent: CommonCrawl
User-agent: Content Harmony
User-agent: Content King
User-agent: Content Optimizer
User-agent: Content Samurai
User-agent: ContentAtScale
User-agent: ContentBot
User-agent: Contentedge
User-agent: Conversion AI
User-agent: Copilot
User-agent: CopyAI
User-agent: Copymatic
User-agent: Copyscape
User-agent: Cotoyogi
User-agent: CrawlQ AI
User-agent: Crawlspace
User-agent: Crew AI
User-agent: CrewAI
User-agent: DALL-E
User-agent: DataForSeoBot
User-agent: DataProvider
User-agent: DeepAI
User-agent: DeepL
User-agent: DeepMind
User-agent: DeepSeek
User-agent: Diffbot
User-agent: Doubao AI
User-agent: DuckAssistBot
User-agent: FacebookBot
User-agent: FacebookExternalHit
User-agent: Falcon
User-agent: Firecrawl
User-agent: Flyriver
User-agent: Frase AI
User-agent: FriendlyCrawler
User-agent: Gemini
User-agent: Gemma
User-agent: GenAI
User-agent: Genspark
User-agent: GLM
User-agent: Goose
User-agent: GPT
User-agent: Grammarly
User-agent: Grendizer
User-agent: Grok
User-agent: GT Bot
User-agent: GTBot
User-agent: Hemingway Editor
User-agent: Hugging Face
User-agent: Hypotenuse AI
User-agent: iaskspider
User-agent: ICC-Crawler
User-agent: ImageGen
User-agent: ImagesiftBot
User-agent: img2dataset
User-agent: INK Editor
User-agent: INKforall
User-agent: IntelliSeek
User-agent: Inferkit
User-agent: ISSCyberRiskCrawler
User-agent: JasperAI
User-agent: Kafkai
User-agent: Kangaroo
User-agent: Keyword Density AI
User-agent: Knowledge
User-agent: KomoBot
User-agent: LLaMA
User-agent: LLMs
User-agent: magpie-crawler
User-agent: MarketMuse
User-agent: Meltwater
User-agent: Meta AI
User-agent: Meta-AI
User-agent: Meta-External
User-agent: MetaAI
User-agent: MetaTagBot
User-agent: Mistral
User-agent: Narrative
User-agent: NeevaBot
User-agent: Neural Text
User-agent: NeuralSEO
User-agent: Nova Act
User-agent: OAI-SearchBot
User-agent: Omgili
User-agent: Open AI
User-agent: OpenAI
User-agent: OpenBot
User-agent: OpenText AI
User-agent: Operator
User-agent: Outwrite
User-agent: Page Analyzer AI
User-agent: PanguBot
User-agent: Paperlibot
User-agent: Paraphraser.io
User-agent: Perplexity
User-agent: PetalBot
User-agent: Phindbot
User-agent: PiplBot
User-agent: ProWritingAid
User-agent: QuillBot
User-agent: RobotSpider
User-agent: Rytr
User-agent: SaplingAI
User-agent: Scalenut
User-agent: Scraper
User-agent: Scrapy
User-agent: ScriptBook
User-agent: SEO Content Machine
User-agent: SEO Robot
User-agent: Sentibot
User-agent: Sidetrade
User-agent: Simplified AI
User-agent: Sitefinity
User-agent: Skydancer
User-agent: SlickWrite
User-agent: Sonic
User-agent: Spin Rewriter
User-agent: Spinbot
User-agent: Stability
User-agent: StableDiffusionBot
User-agent: Sudowrite
User-agent: Super Agent
User-agent: Surfer AI
User-agent: Text Blaze
User-agent: TextCortex
User-agent: The Knowledge AI
User-agent: Timpibot
User-agent: Vidnami AI
User-agent: Webzio
User-agent: Whisper
User-agent: WordAI
User-agent: Wordtune
User-agent: WormsGTP
User-agent: WPBot
User-agent: Writecream
User-agent: WriterZen
User-agent: Writescope
User-agent: Writesonic
User-agent: xAI
User-agent: xBot
User-agent: YouBot
User-agent: Zero GTP
User-agent: Zerochat
User-agent: Zhipu
User-agent: Zimm
Disallow: /
```

Block AI Bots via Apache/.htaccess

To actually enforce the “Ultimate AI Block List”, you can add the following rules to your Apache configuration or main .htaccess file. Like many others, I’ve written extensively on Apache and .htaccess, so if you’re unfamiliar, there are plenty of great resources, including my book .htaccess made easy.

In a nutshell, you can add rules via Apache/.htaccess to customize the functionality of your website. For example, you can add directives that help control traffic, optimize caching, improve performance, and even block bad bots. These rules operate at the server level, so while bots may ignore rules added via robots.txt, they can’t ignore rules added via Apache/.htaccess (unless they falsify their user agent).
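To illustrate what server-level enforcement means, the following Python sketch mimics how the Apache rules on this page decide whether to serve a request: each RewriteCond is an unanchored, case-insensitive ([NC]) regular expression tested against the User-Agent header, [OR] chains the conditions together, and a match makes the RewriteRule's [F] flag answer 403 Forbidden. The pattern groups are a short hypothetical excerpt, and Python's re module stands in for Apache's regex engine, which behaves the same for simple alternations like these.

```python
import re

# Hypothetical excerpt of the RewriteCond patterns. In Apache, each string is a
# separate RewriteCond line; [NC] corresponds to re.IGNORECASE here.
CONDITIONS = [
    r"(Anthropic|Bytespider|CCBot|Claude)",
    r"(DeepSeek|GPT|Perplexity)",
]
COMPILED = [re.compile(pattern, re.IGNORECASE) for pattern in CONDITIONS]

def response_status(user_agent: str) -> int:
    """Return 403 if any condition matches (conditions joined by [OR]), else 200."""
    if any(regex.search(user_agent) for regex in COMPILED):
        return 403  # RewriteRule (.*) - [F,L] sends 403 Forbidden
    return 200      # no condition matched; the request is served normally

print(response_status("Mozilla/5.0 (compatible; GPTBot/1.1; +https://openai.com/gptbot)"))  # 403
print(response_status("Mozilla/5.0 (Windows NT 10.0; Win64; x64) Firefox/125.0"))         # 200
```

The second call also shows the limitation mentioned above: a scraper that sends a browser-like user agent matches nothing and is served normally.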

To block AI bots via Apache/.htaccess, add the following rules either to your server configuration file or to the main (public root) .htaccess file. The rules are wrapped in an IfModule check, so they take effect only when mod_rewrite is enabled on your server. Before making any changes, be on the safe side and back up your files, so you can easily roll back if something unexpected happens. With that in mind, here are the Apache rules to block AI bots.

Blocks 400+ AI bots and user agents.

Block list for Apache/.htaccess

Before using, read the Notes and Disclaimer.

```apache
# Ultimate AI Block List v1.4 20250417
# https://perishablepress.com/ultimate-ai-block-list/

# Spaces and literal dots in bot names are escaped with a backslash, because
# mod_rewrite would otherwise treat each space as the end of the pattern.
<IfModule mod_rewrite.c>
    RewriteEngine On
    RewriteCond %{HTTP_USER_AGENT} (\.ai\ |Agentic|AI\ Article\ Writer|AI\ Content\ Detector|AI\ Dungeon|AI\ Search\ Engine|AI\ SEO\ Crawler|AI\ Writer|AI21\ Labs|AI2Bot|AIBot|AIMatrix|AISearchBot|AI\ Training|AITraining|Alexa|Alpha\ AI|AlphaAI|Amazon\ Bedrock|Amazon-Kendra) [NC,OR]
    RewriteCond %{HTTP_USER_AGENT} (Amazon\ Lex|Amazon\ Comprehend|Amazon\ Sagemaker|Amazon\ Silk|Amazon\ Textract|AmazonBot|Amelia|AndersPinkBot|Anthropic|AnyPicker|Anyword|Aria\ Browse|Articoolo|Automated\ Writer|AwarioRssBot|AwarioSmartBot|Azure|BardBot|Brave\ Leo|ByteDance) [NC,OR]
    RewriteCond %{HTTP_USER_AGENT} (Bytespider|CatBoost|CC-Crawler|CCBot|ChatGLM|Chinchilla|Claude|ClearScope|Cohere|Common\ Crawl|CommonCrawl|Content\ Harmony|Content\ King|Content\ Optimizer|Content\ Samurai|ContentAtScale|ContentBot|Contentedge|Conversion\ AI|Copilot) [NC,OR]
    RewriteCond %{HTTP_USER_AGENT} (CopyAI|Copymatic|Copyscape|Cotoyogi|CrawlQ\ AI|Crawlspace|Crew\ AI|CrewAI|DALL-E|DataForSeoBot|DataProvider|DeepAI|DeepL|DeepMind|DeepSeek|Diffbot|Doubao\ AI|DuckAssistBot|FacebookBot|FacebookExternalHit) [NC,OR]
    RewriteCond %{HTTP_USER_AGENT} (Falcon|Firecrawl|Flyriver|Frase\ AI|FriendlyCrawler|Gemini|Gemma|GenAI|Genspark|GLM|Goose|GPT|Grammarly|Grendizer|Grok|GT\ Bot|GTBot|Hemingway\ Editor|Hugging\ Face|Hypotenuse\ AI) [NC,OR]
    RewriteCond %{HTTP_USER_AGENT} (iaskspider|ICC-Crawler|ImageGen|ImagesiftBot|img2dataset|INK\ Editor|INKforall|IntelliSeek|Inferkit|ISSCyberRiskCrawler|JasperAI|Kafkai|Kangaroo|Keyword\ Density\ AI|Knowledge|KomoBot|LLaMA|LLMs|magpie-crawler|MarketMuse) [NC,OR]
    RewriteCond %{HTTP_USER_AGENT} (Meltwater|Meta\ AI|Meta-AI|Meta-External|MetaAI|MetaTagBot|Mistral|Narrative|NeevaBot|Neural\ Text|NeuralSEO|Nova\ Act|OAI-SearchBot|Omgili|Open\ AI|OpenAI|OpenBot|OpenText\ AI|Operator|Outwrite) [NC,OR]
    RewriteCond %{HTTP_USER_AGENT} (Page\ Analyzer\ AI|PanguBot|Paperlibot|Paraphraser\.io|Perplexity|PetalBot|Phindbot|PiplBot|ProWritingAid|QuillBot|RobotSpider|Rytr|SaplingAI|Scalenut|Scraper|Scrapy|ScriptBook|SEO\ Content\ Machine|SEO\ Robot|Sentibot) [NC,OR]
    RewriteCond %{HTTP_USER_AGENT} (Sidetrade|Simplified\ AI|Sitefinity|Skydancer|SlickWrite|Sonic|Spin\ Rewriter|Spinbot|Stability|StableDiffusionBot|Sudowrite|Super\ Agent|Surfer\ AI|Text\ Blaze|TextCortex|The\ Knowledge\ AI|Timpibot|Vidnami\ AI|Webzio|Whisper) [NC,OR]
    RewriteCond %{HTTP_USER_AGENT} (WordAI|Wordtune|WormsGTP|WPBot|Writecream|WriterZen|Writescope|Writesonic|xAI|xBot|YouBot|Zero\ GTP|Zerochat|Zhipu|Zimm) [NC]
    RewriteRule (.*) - [F,L]
</IfModule>
```

Notes

Note: The two block lists above (robots.txt and Apache/.htaccess) are synchronized and include/block the same AI bots.
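If you edit either list, a quick script can confirm that they still name the same bots. This is a minimal Python sketch, assuming the robots.txt list uses one User-agent line per bot and the Apache list uses the RewriteCond %{HTTP_USER_AGENT} (...) form shown on this page; it lowercases names and removes backslash escapes before comparing, so it works whether or not spaces are escaped. The sample strings are shortened, hypothetical excerpts.

```python
import re

def robots_agents(robots_txt: str) -> set[str]:
    """Names from every 'User-agent:' line, lowercased."""
    names = set()
    for line in robots_txt.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "user-agent":
            names.add(value.strip().lower())
    return names

def apache_agents(htaccess: str) -> set[str]:
    """Alternatives inside each 'RewriteCond %{HTTP_USER_AGENT} (...)' pattern, lowercased."""
    names = set()
    for match in re.finditer(r"RewriteCond\s+%\{HTTP_USER_AGENT\}\s+\((.*)\)\s+\[", htaccess):
        for alternative in match.group(1).split("|"):
            unescaped = re.sub(r"\\(.)", r"\1", alternative)  # "\ " -> " ", "\." -> "."
            names.add(unescaped.strip().lower())
    return names

def compare(robots_txt: str, htaccess: str) -> tuple[list[str], list[str]]:
    """Return (names only in robots.txt, names only in .htaccess)."""
    robots, apache = robots_agents(robots_txt), apache_agents(htaccess)
    return sorted(robots - apache), sorted(apache - robots)

ROBOTS_SAMPLE = """\
User-agent: .ai
User-agent: AI Writer
User-agent: CCBot
Disallow: /
"""
HTACCESS_SAMPLE = r"""
RewriteCond %{HTTP_USER_AGENT} (\.ai\ |AI\ Writer) [NC,OR]
RewriteCond %{HTTP_USER_AGENT} (CCBot) [NC]
"""

print(compare(ROBOTS_SAMPLE, HTACCESS_SAMPLE))  # ([], [])
```

Two empty lists mean the lists agree; any name printed is missing from the other file.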

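Note: Numerous user agents are omitted from the block lists because their names are already matched in wild-card fashion. In the Apache rules, each name matches anywhere in the user-agent string, so GPT also blocks GPTBot and ChatGPT-User. In robots.txt, wild-card matching depends on each bot's parser, since some parsers only apply a rule whose name matches the bot's full name. Here is a list showing wild-card blocked AI bots.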
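To see the difference, here is a small Python sketch comparing the two kinds of matching. wildcard_hits() behaves like an unanchored [NC] RewriteCond (the name may appear anywhere in the user-agent string), while token_hits() approximates a robots.txt parser that compares whole bot names case-insensitively. The user-agent strings are illustrative samples, not captured log entries. (Python's own urllib.robotparser happens to match substrings, which shows how much this varies between parsers.)

```python
import re

# Illustrative user-agent strings (not taken from real logs).
SAMPLE_AGENTS = [
    "Mozilla/5.0 (compatible; GPTBot/1.1; +https://openai.com/gptbot)",
    "Mozilla/5.0 (compatible; ChatGPT-User/1.0; +https://openai.com/bot)",
    "Mozilla/5.0 (compatible; ClaudeBot/1.0; +claudebot@anthropic.com)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Firefox/125.0",
]
# The corresponding bot names a whole-name robots.txt parser would compare against.
SAMPLE_TOKENS = ["GPTBot", "ChatGPT-User", "ClaudeBot"]

def wildcard_hits(name: str, agents: list[str]) -> list[str]:
    """Agents containing `name` anywhere, ignoring case (like an unanchored [NC] RewriteCond)."""
    pattern = re.compile(re.escape(name), re.IGNORECASE)  # escape so "." in names stays literal
    return [agent for agent in agents if pattern.search(agent)]

def token_hits(name: str, tokens: list[str]) -> list[str]:
    """Tokens equal to `name`, ignoring case (whole-name robots.txt matching)."""
    return [token for token in tokens if token.lower() == name.lower()]

print(len(wildcard_hits("GPT", SAMPLE_AGENTS)))  # 2: GPTBot and ChatGPT-User
print(token_hits("GPT", SAMPLE_TOKENS))          # []: no bot is named exactly "GPT"
```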

Note: The block lists focus on AI-related bots. Some of those bots are used by giant corporations like Amazon and Facebook. So please keep this in mind and feel free to remove any bots that you think should be allowed access to your site. Also be sure to check the list of wild-card blocked AI bots.
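If you do remove bots, the Apache conditions need to be rebuilt to match. The following Python sketch is one way to do that, assuming you keep the bot names in a plain list: it drops the names you choose to allow, backslash-escapes spaces and other punctuation so mod_rewrite reads each pattern as one argument, and writes 20 names per RewriteCond with [NC,OR] on every line except the last, mirroring the layout on this page. The ALLOWED set and sample names are hypothetical.

```python
import re

def apache_escape(name: str) -> str:
    """Backslash-escape everything except letters, digits, "_" and "-".
    This makes "." literal and keeps spaces from splitting the pattern."""
    return re.sub(r"([^\w-])", r"\\\1", name)

def rewrite_conds(names: list[str], per_line: int = 20) -> str:
    """Group names into RewriteCond lines; only the last line omits OR."""
    parts = [apache_escape(name) for name in names]
    chunks = [parts[i:i + per_line] for i in range(0, len(parts), per_line)]
    lines = []
    for index, chunk in enumerate(chunks):
        flags = "[NC]" if index == len(chunks) - 1 else "[NC,OR]"
        lines.append("RewriteCond %{HTTP_USER_AGENT} (" + "|".join(chunk) + ") " + flags)
    return "\n".join(lines)

# Hypothetical example: allow two bots, keep the rest blocked.
ALLOWED = {"amazonbot", "facebookexternalhit"}
NAMES = ["AI Writer", "AmazonBot", "CCBot", "FacebookExternalHit", "Paraphraser.io"]
kept = [name for name in NAMES if name.lower() not in ALLOWED]
print(rewrite_conds(kept, per_line=2))
```

Keep in mind that removing a name does not help if a broader name in the list, such as GPT, still matches that bot's user agent. Remove the same names from robots.txt too, so the two lists stay synchronized.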

Note: Both block lists are case-insensitive. The robots.txt rules are case-insensitive because compliant bots match user-agent names without regard to case, and the Apache rules are case-insensitive due to the inclusion of the [NC] flag. So don’t worry about mixed-case bot names: their user agents will be blocked whether uppercase, lowercase, or mIxeD cAsE.

Changelog

v1.4 – 2025/04/17

- Removes Applebot

- Removes both Bing agents

- Removes all 4 Google agents

- Changes PerplexityBot to Perplexity

- Adds: Azure, Falcon, Genspark, GLM, ImageGen, Knowledge, LLMs, Nova Act, Operator, Sitefinity, Sonic, Super Agent, Zhipu

Note: If you don’t care about search results, you can restore the removed blocks for Google, Bing, and Apple:

```text
User-agent: Applebot
User-agent: BingAI
User-agent: Bingbot-chat
User-agent: Google Bard AI
User-agent: Google-CloudVertexBot
User-agent: Google-Extended
User-agent: GoogleOther
```

Previous versions

- Version 1.3 – 2025/03/10 – Adds more AI bots, refines list to make better use of wild-card pattern matching of user-agent names.

- Version 1.2 – 2025/02/12 – Adds 73 AI bots (Thanks to Robert DeVore)

- Version 1.1 – 2025/02/11 – Replaces REQUEST_URI with HTTP_USER_AGENT

- Version 1.0 – 2025/02/11 – Initial release.

Disclaimer

The information shared on this page is provided “as-is”, with the intention of helping people protect their sites against AI bots. The two block lists (robots.txt and Apache/.htaccess) are open-source and free to use and modify without condition. By using either block list, you assume all risk and responsibility for anything that happens. So use wisely, test thoroughly, and enjoy the benefits of my work 🙂

Support my work

I spend countless hours digging through server logs, researching user agents, and compiling block lists to stop AI and other unwanted bots. I share my work freely with the hope that it will help make the Web a more secure place for everyone.

If you benefit from my work and want to show support, please make a donation or buy one of my books, such as .htaccess made easy. You’ll get a complete guide to .htaccess and a ton of awesome techniques for optimizing and securing your site.

Of course, tweets, likes, links, and shares also are super helpful and very much appreciated. Your generous support enables me to continue developing AI block lists and other awesome resources for the community. Thank you kindly 🙂

References

Thanks to the following resources for sharing their work with identifying and blocking AI bots.

- Dark Visitors: Agents

- Blockin’ bots.

- Block the Bots that Feed AI Models by Scraping Your Website

- Go ahead and block AI web crawlers

- I’m blocking AI-crawlers

- GitHub: Tina Ponting’s AI Robots + Scrapers

- GitHub: Robert DeVore’s Block AI Crawlers

- GitHub: ai.robots.txt

- Overview of OpenAI Crawlers

- How to stop your data from being used for AI training

- How to Block OpenAI ChatGPT From Using Your Website Content

- AI haters build tarpits to trap and trick AI scrapers

- Block AI Bots from Crawling Websites Using Robots.txt

- Blocking AI web crawlers

- Understanding the Bots Blocked by AI Scrape Protect

- How to Block Bad Bots

- Perishable Press: Apache Archive

- Perishable Press: .htaccess Archive

- Perishable Press: Blacklist Archive

- Perishable Press: Bots Archive

- Perishable Press: nG Firewall Archive

Feedback

Got more? Leave a comment below with your favorite AI bots to block. Or send privately via my contact form. Cheers! 🙂